Blistering fast Mandelbrot rendering in Rust

- The Mandelbrot set

- The algorithm in pseudocode

- Naive implementation

- Smart ideas that I will not use

- Parallelism: AVX2 and Rayon

- Even more parallelism with OpenCL

- Footnotes

The Mandelbrot set

The Mandelbrot set is the set of complex numbers \(c\) for which the function \(f_c(z)=z^2+c\) does not escape to infinity when iterated starting from \(z=0\). In the classic multicolored rendering that everyone is familiar with each color represents how quickly the iteration diverged.

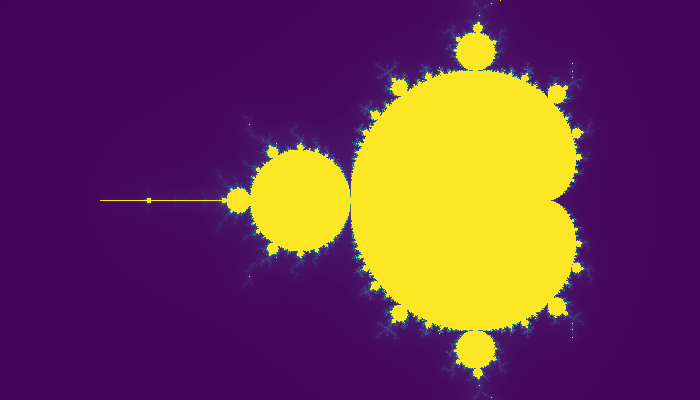

In this article I’ll show how we can render the image above1 in Rust using increasingly complex and faster approaches.

The algorithm in pseudocode

Obviously Wikipedia as an article on plotting algorithms for the Mandelbrot set. The basic algorithm is very straightforward:

for each pixel (Px, Py) on the screen:

x0 = scaled x coordinate to lie between -2.5 and 1

y0 = scaled y coordinate to lie between -1 and 1

x = 0.0

y = 0.0

iteration = 0

max_iteration = 1000

while (x*x + y*y <= 4 and iteration < max_iteration):

xtemp = x*x - y*y + x0

y = 2*x*y + y0

x = xtemp

itearation = iteration + 1

color = palette[iteration]

put color at coordinates Px, Py

Naive implementation

The naive implementation is a straight up porting of the code above:

use image;

use std::format;

use std::io::{self, Write};

use std::path::Path;

use std::time::Instant;

// Function to map the number of iterations i to a grey value between 0 (black)

// and 255 (white).

fn get_color(i: u32, max_iterations: u32) -> image::Rgb<u8> {

if i > max_iterations {

return image::Rgb([255, 255, 255]);

}

if max_iterations == 255 {

let idx = i as u8;

return image::Rgb([idx, idx, idx]);

}

let idx = (((i as f32) / (max_iterations as f32)) * 255.0).round() as u8;

return image::Rgb([idx, idx, idx]);

}

// Function to run a Mandelbrot rendering algorithm and measure its execution

// time.

// Arguments:

// name: name of the algorithm, it's used to print its name and save the output.

// w: width of the output image, in pixels.

// h: height of the output image, in pixels.

// save_image: if true, save the output of the algorithm to

// /tmp/mandelbrot_{name}.png

// algo: actual rendering algorithm that should take as inputs the width and

// height of the output image and returns an image::RgbImage

fn runalgo(name: &str, w: u32, h: u32, save_image: bool, algo: fn(u32, u32) -> image::RgbImage) {

print!("Executing {}... ", name);

io::stdout().flush().unwrap();

let now = Instant::now();

let img = algo(w, h);

let elapsed = now.elapsed().as_millis() as f32 / 1000.0;

if save_image {

let fname = format!("mandelbrot_{}.png", name);

img.save_with_format(Path::new("/tmp").join(fname), image::ImageFormat::Png)

.unwrap();

}

println!("{}s", elapsed);

}

// Render the Mandelbrot set. w and h are respectively the widht and height of

// the output image. Refer to

// https://en.wikipedia.org/wiki/Plotting_algorithms_for_the_Mandelbrot_set for

// a detailed explanation of this algorithm.

fn naive(w: u32, h: u32) -> image::RgbImage {

let mut img = image::RgbImage::new(w, h);

for c in 0..w {

let x0 = ((c as f32) / (w as f32)) * 3.5 - 2.5;

for r in 0..h {

let y0 = ((r as f32) / (h as f32)) * 2.0 - 1.0;

let mut x = 0.0;

let mut y = 0.0;

let mut iteration: u32 = 0;

while x * x + y * y <= 4.0 && iteration < MAX_ITERATIONS {

let xtemp = x * x - y * y + x0;

y = 2.0 * x * y + y0;

x = xtemp;

iteration = iteration + 1;

}

let rgb = get_color(iteration, MAX_ITERATIONS);

img.put_pixel(c, r, rgb);

}

}

img

}

fn main() {

let width = 14000;

let height = 8000;

let save_image = false;

runalgo("naive", width, height, save_image, naive);

}

Running this code generates the familiar Mandelbrot set:

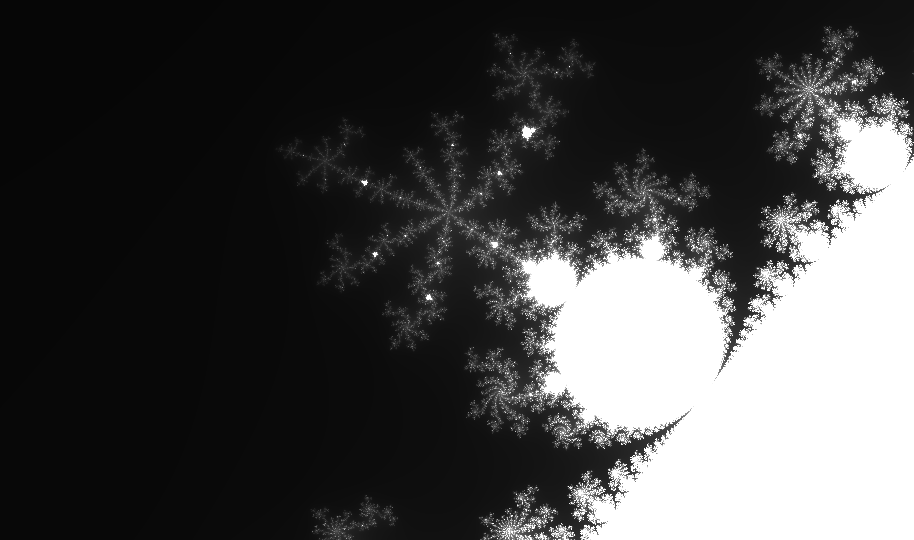

A detail of the image generated by the algorithm above.

A detail of the image generated by the algorithm above.

The output on the console on my 3.70GHz Intel i7-8700K is:

$ cargo build --release && ./target/release/mandelbrot

Compiling mandelbrot v0.1.0 (/home/gcardone/projects/mandelbrot)

Finished release [optimized + debuginfo] target(s) in 0.62s

Executing naive... 18.437s

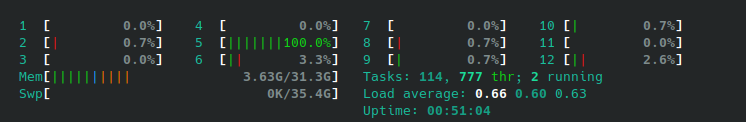

While that runs, htop shows the following CPU load:

Only one core is busy calculating precious Mandelbrot pixels, all the other cores are idling.

Only one core is busy calculating precious Mandelbrot pixels, all the other cores are idling.

I should run the algorithm several times to get a median or 95-ile running time, but it’s unlikely that it will suddenly take 2 seconds. Let’s see how it can be sped up.

Smart ideas that I will not use

There are several clever ideas to increase the speed of the algorithm above:

- with some algebraic manipulation it’s possible to reduce the number of multiplications in the inner loop from 6 to 3 (see here;

- exploit the fact that the Mandelbrot set is symmetric about the x-axis and compute values only in the upper part of the cartesian plane and flip it to render the lower part, but this works only for rendering the whole fractal, it’s not useful for a zoomed-in version;

- check if a point is within the main bulb of the set (see here

- check if a value reached while iterating a pixel has been reached before, i.e.: memoize the main algorithm

These options can all provide some speed ups. But let’s see if we can do better by simply applying some good ol’ brute force.

Parallelism: AVX2 and Rayon

The Mandelbrot algorithm is an embarrassingly parallel problem: the colour of each pixel can be calculated independently from all other pixels, therefore it can be trivially parallelized. I decided to parallelize the algorithm above in two ways: by using AVX2 extensions to compute several pixels in parallel using wide CPU registers, and Rayon to parallelize across all available cores.

The shortest AVX2 crash course ever

AVX2 is an extension to the x86 instruction set that provides wide registers containing several integers or floats, and matching instructions that can operate on all the values inside a register in parallel. For the scope of this article we need a very basic knowledge of how to write AVX2 code:

- the only datatypes that we’ll need are:

__m256: a 256-bit vector packing 8 single-precision floats;__m256i: a 256-bit vector packing 8 32-bit integers;

- AVX/AVX2 instruction names have the pattern

_mm<bit width>_<function>_<data type>. Intel’s intrinsics guide is a handy guide to all AVX instructions. For example__m256i _mm256_add_epi32 (__m256i a, __m256i b)adds packed 32-bit integers inaandb, and store the results indst.

Note that usinv AVX/AVX2/AVX-512 is not guaranteed to speed up your code, or to speed it up by a factor of 8x. For example Intel CPUs can downclock cores because AVX-512-heavy workloads can cause parts of the die to warm up unevenly2. In particular a machine that runs both AVX and non-AVX code at the same time might be negatively impacted, see Travis Downs’ analysis of Intel AVX-512 transitions, a cautionary tale from Cloudflare, and Daniel Lemire’s analysis. Anyway writing AVX2 for a Mandelbrot fractal sounds like fun, and it’s unlikely that a Fortune-100 company will rely on this code. So: whatevs. Let’s use AVX2 (only a very small subset of CPUs support AVX-512 and my CPU is not one of those).

I will heavily comment the AVX code above, but take a look to Code Project for a more verbose crash course.

The shortest Rust Rayon crash course ever pinky promise

Step 1: add to your Cargo.toml:

[dependencies]

rayon = "1.5"

Then add to the top of your .rs file use rayon::prelude::*; and replace every call to iter() with par_iter(). Rayon will automatically parallelize the execution of the iteration. That’s it. Shortest course ever.

Parallelizing the algorithm

Now, armed with Intel’s Intrinsics Guide, let’s parallelize the algorithm:

// Import rayon

use rayon::prelude::*;

// Import SIMD instructions

use std::arch::x86_64::*;

// ...

struct Pixel {

x: u32,

y: u32,

iterations: u32,

}

fn avxrayon(w: u32, h: u32) -> image::RgbImage {

let mut img = image::RgbImage::new(w as u32, h as u32);

// Conditional build only for x86_64, see

// https://doc.rust-lang.org/reference/conditional-compilation.html

#[cfg(target_arch = "x86_64")]

{

if is_x86_feature_detected!("avx2") {

unsafe {

// The closure process_column processes a column of the mandelbrot image and

// returns a vector of Pixels. Each pixel contains its coordinates and the

// number of iterations. The algorithm below is an exact implementation of the

// naive algorithm using AVX2 instructions. `c` is the column to process,

// that is the x coordinate.

let process_column = |c: u32| -> Vec<Pixel> {

let mut v = vec![];

let x0 = ((c as f32) / (w as f32)) * 3.5 - 2.5;

// Initialize ax0 as 8 packed single precision float initialized to x0.

let ax0 = _mm256_set1_ps(x0);

// r is the row to process, that is the y coordinate. We step by 8 because

// AVX2 packs 8 floats in a single register.

for r in (0..h).step_by(8) {

// Initialize ar with r, repeated 8 times...

let ar: __m256 = _mm256_set1_ps(r as f32);

// ... and then add 7, 6, ... 0 to the ar coordinates. This means that

// the floats packed in ay0 contain the coordinates of contiguous pixels

// along the y axis.

let mut ay0 =

_mm256_add_ps(_mm256_set_ps(7., 6., 5., 4., 3., 2., 1., 0.), ar);

// ay0 = (r / h) * 2 - 1

ay0 = _mm256_sub_ps(

_mm256_mul_ps(

_mm256_div_ps(ay0, _mm256_set1_ps(h as f32)),

_mm256_set1_ps(2.0),

),

_mm256_set1_ps(1.0),

);

// ax = 0

let mut ax = _mm256_set1_ps(0.0);

// ay = 0

let mut ay = _mm256_set1_ps(0.0);

// aiters contains the number of iterations for each pixel, initialized

// to 0.

let mut aiters = _mm256_set1_epi32(0);

// If a packed integer in amask is set to 1, then the iterator in aiters

// in the same position will be incremented. This allows us to repeat the

// core escape loop only if at least one of the pixels needs more iterations.

// If amask is all set to zero then we can bail out.

let mut amask = _mm256_set1_epi32(1);

for _ in 0..MAX_ITERATIONS {

// axtemp = x * x - y * y + x0

let axtemp = _mm256_add_ps(

_mm256_sub_ps(_mm256_mul_ps(ax, ax), _mm256_mul_ps(ay, ay)),

ax0,

);

// y = 2.0 * x * y + y0

// The "2.0 * x" multiplication has been replaced with a more efficient

// x + x.

ay = _mm256_add_ps(

_mm256_mul_ps(_mm256_add_ps(ax, ax), ay),

ay0,

);

ax = axtemp;

// Increase all the iterations if the matching mask is set to 1.

aiters = _mm256_add_epi32(aiters, amask);

// threshold = x * x + y * y

let athreshold =

_mm256_add_ps(_mm256_mul_ps(ax, ax), _mm256_mul_ps(ay, ay));

// Compare the values in athreshold with 4.0, and store 0xFFFFFFFF in

// acond if the condition is true, 0 otherwise.

let acond = _mm256_cmp_ps(athreshold, _mm256_set1_ps(4.0), _CMP_LE_OQ);

// Do a logical and between amask and the acond. This means that each

// packed integer in amask will be set to zero if x * x + y * y > 4.0.

amask = _mm256_and_si256(amask, _mm256_castps_si256(acond));

// If amask contains only bits set to zero, then we don't need to keep

// iterating.

let breakthreshold: i32 =

_mm256_testz_si256(amask, _mm256_set1_epi32(-1));

if breakthreshold == 1 {

break;

}

}

// Unpack the iteration values in a rust old-fashioned array

let mut iters_unpacked = [0i32; 8];

_mm256_maskstore_epi32(&mut iters_unpacked[0], _mm256_set1_epi32(-1), aiters);

// Store the result of the computation in a vector

for (count, ir) in iters_unpacked.iter().enumerate() {

v.push(Pixel{x: c, y: r + count as u32, iterations: *ir as u32});

}

}

v

};

// Use rayon to parallelize the execution of the code above across all available

// cores and collect the results.

let vecs: Vec<Vec<Pixel>> =

(0..w).into_par_iter().map(|c| process_column(c)).collect();

for column_result in vecs.iter() {

for item in column_result.iter() {

img.put_pixel(item.x, item.y, get_color(item.iterations, MAX_ITERATIONS))

}

}

}

}

}

img

}

fn main() {

// …

runalgo("avxrayon", width, height, save_image, avxrayon);

}

Let’s run this version!

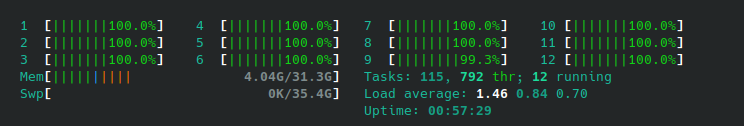

htop looks like this:

Look at that! All cores are crunching numbers!

Look at that! All cores are crunching numbers!

$ cargo build --release && ./target/release/mandelbrot

Compiling mandelbrot v0.1.0 (/home/gcardone/projects/mandelbrot)

Finished release [optimized] target(s) in 1.04s

Executing avxrayon... 6.668s

The results are… a bit underwhelming actually! 6.668s is only a 2.76x speedup over the naive implementation. I was not expecting a perfect 8x speedup, but this is sad.

I have some theories on why this might be happening:

- perhaps the generated code is not inlining enough (I tried adding

lto = truetoCargo.tomlbut it didn’t help); - probably the generated code is not as efficient as handcrafted assembly;

- perhaps achieving a 8x speedup with vectorization intrinsics (i.e.: all the

__mm<foo> functions) requires being more careful when juggling variables; - perhaps the code does not fit in the micro-op cache, which causes them to be decoded more often than necessary;

- apparently some Intel CPUs apply some power saving algorithm and turn off the most expensive computing units when they’re not in use, so maybe performances would improve if I let the code run for longer;

- I wrote the code above to be readable rather than being optimized, which may cause some performance hits. For example, AVX instructions can be non-destructive (e.g.:

C = A + B) and destructive (e.g.:A = A + B). Mixing the two causes pipeline stalls and code slowdowns.

So, I need to analyze this more to understand how to speed it up. But until then, let’s try something different to make the code go faster.

Even more parallelism with OpenCL

OpenCL is a framework for writing code that can compiled to run on CPUs, GPUs, and other hardware accelerators. OpenCL’s programming model is not trivial, but to render a Mandelbrot set we need very few concepts:

- OpenCL programs are formed by units of code called kernels;

- kernels are often written in OpenCL C, which is based on C99;

- OpenCL programs get compiled on the host system and then executed on the hardware accelerator - which in my case is my GPU;

- kernels operate over an N-dimensional data structure, which in the case of an image would be 2-dimensional;

- each item in the N-dimensional data structure is called work-item, and a copy of the kernel running a single processing element.

OpenCL is available in Rust with ocl. To use ocl add to Cargo.toml

[dependencies]

ocl = "0.19"

Armed with this knowledge, here is how to implement the Mandelbrot algorithm:

fn opencl(w: u32, h: u32) -> image::RgbImage {

let mut img = image::RgbImage::new(w as u32, h as u32);

// This is the kernel that we will be executed by each worker:

// buffer: output buffer where to store the number of iterations before the f(c) diverges.

// Accessing this memory is expensive, so it should be used sparingly. Since the

// output buffer is 1-dimensional, we will need to flatten the output by mapping

// a pixel at coordinates (r, c) to (r * width + c).

// width: image width.

// height: image height.

// max_iterations: maximum number of iterations of f(c).

let src = r#"

__kernel void mandelbrot(__global uint* buffer, uint width, uint height, uint max_iterations) {

// Get the x coordinate of this worker. We can get x and y coordinates because the kernel

// operates over a 2-dimensional data structure, as specified in the Rust code below.

int c = get_global_id(0);

// Get the y coordinate of this worker.

int r = get_global_id(1);

// The code below is an almost line-by-line port of the naive implementation, which has

// been optimized to have only 3 multiplications in the inner loop.

float x0 = ((float)c / width) * 3.5 - 2.5;

float y0 = ((float)r / height) * 2.0 - 1.0;

float x = 0.0;

float y = 0.0;

float x2 = 0.0;

float y2 = 0.0;

uint iteration = 0;

while (((x2 + y2) <= 4.0) && (iteration < max_iterations)) {

y = (x + x) * y + y0;

x = x2 - y2 + x0;

x2 = x * x;

y2 = y * y;

iteration = iteration + 1;

}

// Store the number of iterations computed by this worker.

buffer[width * r + c] = iteration;

}

"#;

// Build an OpenCL context, make it run the OpenCL C code defined above, and set the

// data structure to operate on as a 2-dimensional w by h structure.

let pro_que = ProQue::builder().src(src).dims((w, h)).build().unwrap();

// Let buffer be the output buffer accessible by workers. This memory lives on the

// hardware accelerator (e.g.: the GPU).

let buffer = pro_que.create_buffer::<u32>().unwrap();

// Build the OpenCL program, make it run the kernel called `mandelbrot` and bind actual

// values to the arguments of `mandelbrot`.

let kernel = pro_que

.kernel_builder("mandelbrot")

.arg(&buffer)

.arg(w)

.arg(h)

.arg(MAX_ITERATIONS as u32)

.build()

.unwrap();

// Run the OpenCL kernel

unsafe { kernel.enq().unwrap() };

let mut vec = vec![0u32; buffer.len()];

// Copy the OpenCL buffer back to a traditional Vec.

buffer.read(&mut vec).enq().unwrap();

for (idx, iteration) in vec.iter().enumerate() {

let rgb = get_color(*iteration, MAX_ITERATIONS);

let x = idx as u32 % w;

let y = idx as u32 / w;

img.put_pixel(x, y, rgb);

}

img

}

For reference my GPU is a Nvidia RTX 2080. Let’s run this version!

$ cargo build --release && ./target/release/mandelbrot

Compiling mandelbrot v0.1.0 (/home/ippatsuman/projects/mandelbrot)

Finished release [optimized] target(s) in 6.98s

Executing opencl... 0.816s

This is a much nicer improvement: 22.5x compared to the naive version for code that is way less complicated than the one based on AVX2.

Using OpenCL incurs in some fixed costs: simply compiling the kernel and transferring on the GPU takes non-zero time (about 200ms on my machine), so the speed up rate increases for larger images.

Trying to render a 56000 x 32000 image results in:

Executing naive... 314.635s

Executing avxrayon... 119.919s

Executing opencl... 9.468s

That’s a 2.6x speedup for AVX2+Rayon, and 33.2x for OpenCL.

These are nice results, especially the OpenCL version, but honestly I was expecting the accelerated code to be faster.

I suppose that I have no choice but to re-implement all of this in C/C++ and compare the running time and resulting assembly.

Hold my compiler, I’m going in!

Footnotes

In this rendering I used matplotlib’s viridis color palette. ↩︎

https://en.wikichip.org/wiki/intel/frequency_behavior ↩︎